How To Make A Camera Orbit A Character C#

Orbit Camera

Relative Control

- Create an orbiting camera.

- Support manual and automatic camera rotation.

- Make movement relative to the camera.

- Prevent the camera from intersecting geometry.

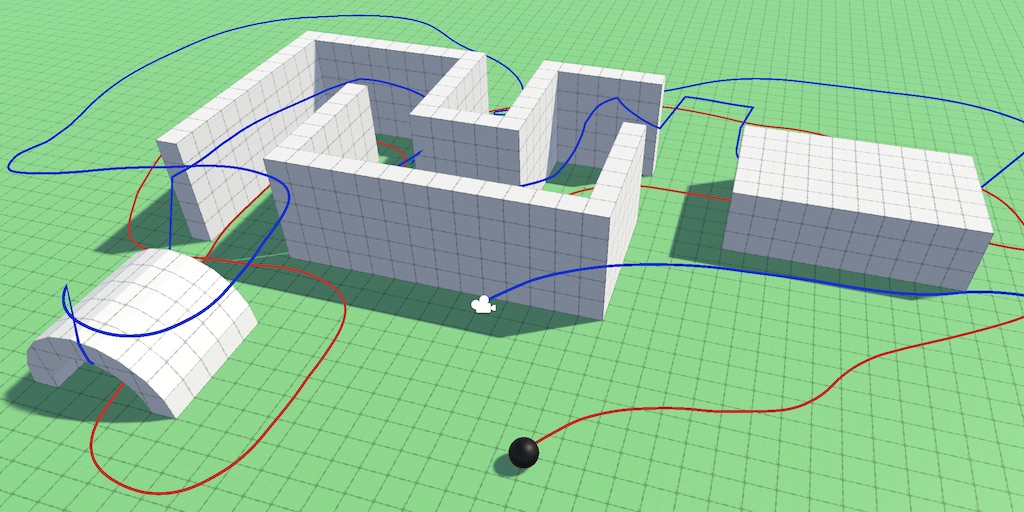

This is the fourth installment of a tutorial series about controlling the movement of a character. This time we focus on the camera, creating an orbiting point of view from which we control the sphere.

This tutorial is made with Unity 2019.2.18f1. It also uses the ProBuilder package.

Following the Sphere

A fixed point of view only works when the sphere is constrained to an area that is completely visible. But usually characters in games can roam about large areas. The typical ways to make this possible are either using a first-person view or having the camera follow the player's avatar in third-person view mode. Other approaches exist as well, like switching between multiple cameras depending on the avatar's position.

Orbit Camera

We'll create a simple orbiting camera to follow our sphere in third-person mode. Define an OrbitCamera component type for it, giving it the RequireComponent attribute to enforce that it gets attached to a game object that also has a regular Camera component.

using UnityEngine; [RequireComponent(typeof(Camera))] public class OrbitCamera : MonoBehaviour {}

Adjust the main camera of a scene with a single sphere so it has this component. I made a new scene for this with a large flat plane, positioning the camera so it looks down at a 45° angle with the sphere at the center of its view, at a distance of roughly five units.

Maintaining Relative Position

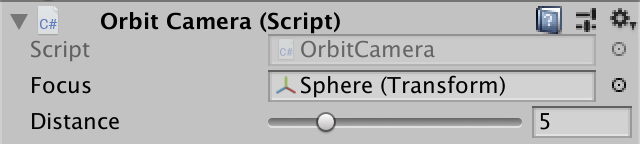

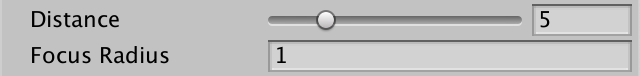

To keep the camera focused on the sphere we need to tell it what to focus on. This could really be anything, so add a configurable Transform field for the focus. Also add an option for the orbit distance, set to five units by default.

[SerializeField] Transform focus = default; [SerializeField, Range(1f, 20f)] float distance = 5f;

Every update we have to adjust the camera's position so it stays at the desired distance. We'll do this in LateUpdate in case anything moves the focus in Update. The camera's position is found by moving it away from the focus position, in the direction opposite to where it's looking, by an amount equal to the configured distance. We'll use the position property of the focus instead of localPosition so we can correctly focus on child objects inside a hierarchy.

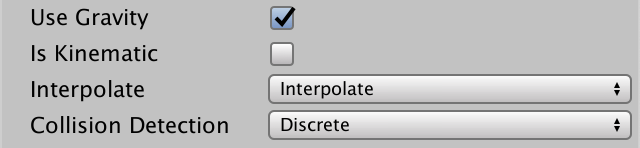

void LateUpdate () { Vector3 focusPoint = focus.position; Vector3 lookDirection = transform.forward; transform.localPosition = focusPoint - lookDirection * distance; }

The camera will now always stay at the same distance and orientation, but because PhysX adjusts the sphere's position at a fixed time step, so will our camera. When that doesn't match the frame rate it will result in jittery camera motion.

The simplest and most robust way to fix this is by setting the sphere's Rigidbody to interpolate its position. That gets rid of the jittery motion of both the sphere and the camera. This is typically only needed for objects that are focused on by the camera.

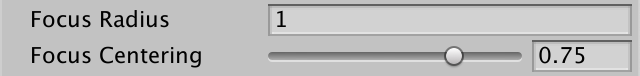

Focus Radius

Always keeping the sphere in exact focus might feel too rigid. Even the smallest motion of the sphere will be copied by the camera, which affects the entire view. We can relax this constraint by making the camera only move when its focus point differs too much from the ideal focus. We'll make this configurable by adding a focus radius, set to one unit by default.

[SerializeField, Min(0f)] float focusRadius = 1f;

A relaxed focus requires us to keep track of the current focus point, as it might no longer exactly match the position of the focus. Initialize it to the focus object's position in Awake and move updating it to a separate UpdateFocusPoint method.

Vector3 focusPoint; void Awake () { focusPoint = focus.position; } void LateUpdate () { /* Vector3 focusPoint = focus.position; */ UpdateFocusPoint(); Vector3 lookDirection = transform.forward; transform.localPosition = focusPoint - lookDirection * distance; } void UpdateFocusPoint () { Vector3 targetPoint = focus.position; focusPoint = targetPoint; }

If the focus radius is positive, check whether the distance between the target and current focus points is greater than the radius. If so, pull the focus toward the target until the distance matches the radius. This can be done by interpolating from target point to current point, using the radius divided by the current distance as the interpolator. Otherwise directly set the focus point to the target point as before.

Vector3 targetPoint = focus.position; if (focusRadius > 0f) { float distance = Vector3.Distance(targetPoint, focusPoint); if (distance > focusRadius) { focusPoint = Vector3.Lerp( targetPoint, focusPoint, focusRadius / distance ); } } else { focusPoint = targetPoint; }

Centering the Focus

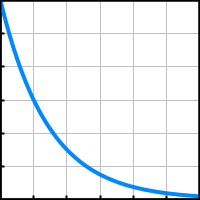

Using a focus radius makes the camera respond only to larger motion of the focus, but when the focus stops so does the camera. It's also possible to keep the camera moving until the focus is back in the center of its view. To make this motion appear more subtle and organic we can pull back slower as the focus approaches the center.

For example, the focus starts at some distance from the center. We pull it back so that after a second that distance has been halved. We keep doing this, halving the distance every second. The distance will never be reduced to zero this way, but we can stop when it has gotten small enough that it is unnoticeable.

Halving a starting distance each second can be done by multiplying it with ½ raised to the elapsed time: `d_(n+1) = d_n (1/2)^(t_n)`. We don't need to exactly halve the distance each second; we can use an arbitrary centering factor between zero and one: `d_(n+1) = d_n c^(t_n)`.
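The practical benefit of raising the factor to the elapsed time is that the result doesn't depend on how many updates happen per second. The following is a minimal standalone sketch of that idea, not part of OrbitCamera; the class name CenteringDecay and the method Simulate are made up for illustration, and it uses plain System math instead of Unity's Mathf.

```csharp
using System;

public static class CenteringDecay
{
    // Shrinks a distance in steps of dt seconds using
    // d_(n+1) = d_n * factor^dt, where factor is the fraction
    // of the distance that should remain after one second.
    public static double Simulate(double start, double factor, double dt, int steps)
    {
        double d = start;
        for (int i = 0; i < steps; i++)
        {
            d *= Math.Pow(factor, dt);
        }
        return d;
    }

    public static void Main()
    {
        // One simulated second, at 30 and at 60 updates per second.
        Console.WriteLine(Simulate(1.0, 0.5, 1.0 / 30.0, 30));
        Console.WriteLine(Simulate(1.0, 0.5, 1.0 / 60.0, 60));
    }
}
```

Both lines print a value very close to 0.5, apart from floating-point rounding, so the distance is halved after one second regardless of the update rate.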

Add a configuration option for the focus centering factor, which has to be a value in the 0–1 range, with 0.5 as a good default.

[SerializeField, Range(0f, 1f)] float focusCentering = 0.5f;

To apply the expected centering behavior we have to interpolate between the target and current focus points, using `(1-c)^t` as the interpolator, with help of the Mathf.Pow method. Using `1-c` as the base means that a higher centering factor centers faster. We only need to do this if the distance is large enough (say above 0.01) and the centering factor is positive. To both center and enforce the focus radius we use the minimum of both interpolators for the final interpolation.

float distance = Vector3.Distance(targetPoint, focusPoint); float t = 1f; if (distance > 0.01f && focusCentering > 0f) { t = Mathf.Pow(1f - focusCentering, Time.deltaTime); } if (distance > focusRadius) { /* focusPoint = Vector3.Lerp(targetPoint, focusPoint, focusRadius / distance); */ t = Mathf.Min(t, focusRadius / distance); } focusPoint = Vector3.Lerp(targetPoint, focusPoint, t);

But relying on the normal time delta makes the camera subject to the game's time scale, so it would also slow down during slow motion effects and even freeze in place if the game is paused. To prevent this, make it depend on Time.unscaledDeltaTime instead.

t = Mathf.Pow(1f - focusCentering, Time.unscaledDeltaTime);

Orbiting the Sphere

The next step is to make it possible to adjust the camera's orientation so it can describe an orbit around the focus point. We'll make it possible to both manually control the orbit and have the camera automatically rotate to follow its focus.

Orbit Angles

The orientation of the camera can be described with two orbit angles. The X angle defines its vertical orientation, with 0° looking straight to the horizon and 90° looking straight down. The Y angle defines the horizontal orientation, with 0° looking along the world Z axis. Keep track of those angles in a Vector2 field, set to 45° and 0° by default.

Vector2 orbitAngles = new Vector2(45f, 0f);
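To make the two angles more concrete, here is a small standalone sketch that turns them into a look direction and a camera position with plain trigonometry. It assumes the convention described above (positive X angle looks down, Y angle of 0 looks along +Z). The names OrbitMath, LookDirection and CameraPosition are hypothetical, and System.Numerics is used only as a vector container so the code runs outside Unity.

```csharp
using System;
using System.Numerics;

public static class OrbitMath
{
    // Look direction for a vertical (X) and horizontal (Y) orbit angle in
    // degrees. Assumed convention: positive X angle looks down, and a
    // Y angle of 0 looks along +Z.
    public static Vector3 LookDirection(float xDegrees, float yDegrees)
    {
        float pitch = xDegrees * MathF.PI / 180f;
        float yaw = yDegrees * MathF.PI / 180f;
        return new Vector3(
            MathF.Sin(yaw) * MathF.Cos(pitch),
            -MathF.Sin(pitch),
            MathF.Cos(yaw) * MathF.Cos(pitch)
        );
    }

    // Camera position: step back from the focus, against the look direction.
    public static Vector3 CameraPosition(Vector3 focus, Vector2 angles, float distance)
    {
        return focus - LookDirection(angles.X, angles.Y) * distance;
    }

    public static void Main()
    {
        // Default angles of 45 and 0 degrees at a distance of five units.
        Vector3 p = CameraPosition(Vector3.Zero, new Vector2(45f, 0f), 5f);
        Console.WriteLine(p); // roughly 3.54 units above and 3.54 units behind the focus
    }
}
```

In the component itself this work is done by a quaternion, which also yields the rotation that the camera needs.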

In LateUpdate we'll now have to construct a quaternion defining the camera's look rotation via the Quaternion.Euler method, passing it the orbit angles. It requires a Vector3, to which our vector implicitly gets converted, with the Z rotation set to zero.

The look direction can then be found by replacing transform.forward with the quaternion multiplied with the forward vector. And instead of only setting the camera's position we'll now invoke transform.SetPositionAndRotation with the look position and rotation in one go.

void LateUpdate () { UpdateFocusPoint(); Quaternion lookRotation = Quaternion.Euler(orbitAngles); Vector3 lookDirection = lookRotation * Vector3.forward; Vector3 lookPosition = focusPoint - lookDirection * distance; transform.SetPositionAndRotation(lookPosition, lookRotation); }

Controlling the Orbit

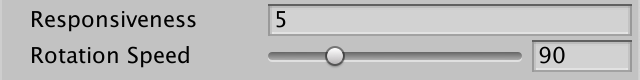

To manually control the orbit, add a rotation speed configuration option, expressed in degrees per second. 90° per second is a reasonable default.

[SerializeField, Range(1f, 360f)] float rotationSpeed = 90f;

Add a ManualRotation method that retrieves an input vector. I defined Vertical Camera and Horizontal Camera input axes for this, bound to the third and fourth axis, the ijkl and qe keys, and the mouse with sensitivity increased to 0.5. It is a good idea to make sensitivity configurable in your game and to allow flipping of axis directions, but we won't bother with that in this tutorial.

If there's an input exceeding some small epsilon value like 0.001 then add the input to the orbit angles, scaled by the rotation speed and time delta. Again, we make this independent of the in-game time.

void ManualRotation () { Vector2 input = new Vector2( Input.GetAxis("Vertical Camera"), Input.GetAxis("Horizontal Camera") ); const float e = 0.001f; if (input.x < -e || input.x > e || input.y < -e || input.y > e) { orbitAngles += rotationSpeed * Time.unscaledDeltaTime * input; } }

Invoke this method after UpdateFocusPoint in LateUpdate.

void LateUpdate () { UpdateFocusPoint(); ManualRotation(); … }

Note that the sphere is still controlled in world space, regardless of the camera's orientation. So if you horizontally rotate the camera 180° then the sphere's controls will appear flipped. This makes it possible to easily keep the same heading no matter the camera view, but can be disorienting. If you have trouble with this you can have both the game and scene window open at the same time and rely on the fixed perspective of the latter. We'll make the sphere controls relative to the camera view later.

Constraining the Angles

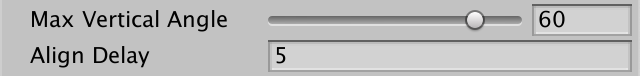

While it's fine for the camera to describe full horizontal orbits, vertical rotation will turn the world upside down once it goes beyond 90° in either direction. Even before that point it becomes hard to see where you're going when looking mostly up or down. So let's add configuration options to constrain the min and max vertical angle, with the extremes limited to at most 89° in either direction. Let's use −30° and 60° as the defaults.

[SerializeField, Range(-89f, 89f)] float minVerticalAngle = -30f, maxVerticalAngle = 60f;

The max should never drop below the min, so enforce that in an OnValidate method. As this only sanitizes configuration via the inspector, we don't need to invoke it in builds.

void OnValidate () { if (maxVerticalAngle < minVerticalAngle) { maxVerticalAngle = minVerticalAngle; } }

Add a ConstrainAngles method that clamps the vertical orbit angle to the configured range. The horizontal orbit has no limits, but ensure that the angle stays inside the 0–360 range.

void ConstrainAngles () { orbitAngles.x = Mathf.Clamp(orbitAngles.x, minVerticalAngle, maxVerticalAngle); if (orbitAngles.y < 0f) { orbitAngles.y += 360f; } else if (orbitAngles.y >= 360f) { orbitAngles.y -= 360f; } }

We only need to constrain angles when they changed. So make ManualRotation return whether it made a change and invoke ConstrainAngles based on that in LateUpdate. We also only need to recalculate the rotation if there was a change, otherwise we can retrieve the existing one.

bool ManualRotation () { … if (input.x < -e || input.x > e || input.y < -e || input.y > e) { orbitAngles += rotationSpeed * Time.unscaledDeltaTime * input; return true; } return false; } … void LateUpdate () { UpdateFocusPoint(); Quaternion lookRotation; if (ManualRotation()) { ConstrainAngles(); lookRotation = Quaternion.Euler(orbitAngles); } else { lookRotation = transform.localRotation; } /* Quaternion lookRotation = Quaternion.Euler(orbitAngles); */ … }

We must also make sure that the initial rotation matches the orbit angles in Awake.

void Awake () { focusPoint = focus.position; transform.localRotation = Quaternion.Euler(orbitAngles); }

Automatic Alignment

A common feature of orbit cameras is that they align themselves to stay behind the player's avatar. We'll do this by automatically adjusting the horizontal orbit angle. But it is important that the player can override this automatic behavior at all times and that the automatic rotation doesn't immediately kick back in. So we'll add a configurable align delay, set to five seconds by default. This delay doesn't have an upper bound. If you don't want automatic alignment at all then you can simply set a very high delay.

[SerializeField, Min(0f)] float alignDelay = 5f;

Keep track of the last time that a manual rotation happened. Once again we rely on the unscaled time here, not the in-game time.

float lastManualRotationTime; … bool ManualRotation () { … if (input.x < -e || input.x > e || input.y < -e || input.y > e) { orbitAngles += rotationSpeed * Time.unscaledDeltaTime * input; lastManualRotationTime = Time.unscaledTime; return true; } return false; }

Then add an AutomaticRotation method that also returns whether it changed the orbit. It aborts if the current time minus the last manual rotation time is less than the align delay.

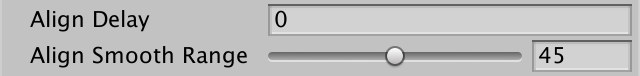

bool AutomaticRotation () { if (Time.unscaledTime - lastManualRotationTime < alignDelay) { return false; } return true; }

In LateUpdate we now constrain the angles and calculate the rotation when either manual or automatic rotation happened, tried in that order.

if (ManualRotation() || AutomaticRotation()) { ConstrainAngles(); lookRotation = Quaternion.Euler(orbitAngles); }

Focus Heading

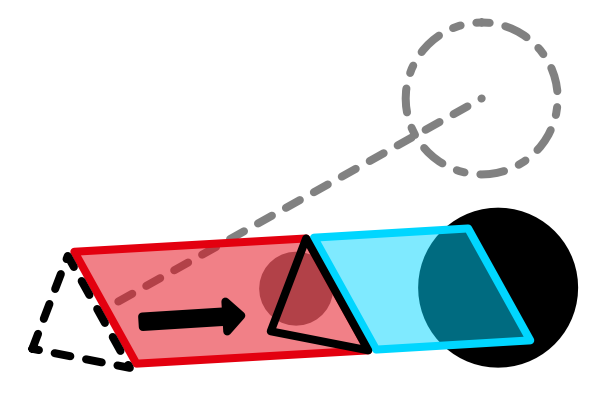

The criteria that are used to align cameras vary. In our case, we'll base it solely on the focus point's movement since the previous frame. The idea is that it makes most sense to look in the direction that the focus was last heading. To make this possible we'll need to know both the current and previous focus point, so have UpdateFocusPoint set fields for both.

Vector3 focusPoint, previousFocusPoint; … void UpdateFocusPoint () { previousFocusPoint = focusPoint; … }

Then have AutomaticRotation calculate the movement vector for the current frame. As we're only rotating horizontally we only need the 2D movement in the XZ plane. If the square magnitude of this movement vector is less than a small threshold like 0.0001 then there wasn't much movement and we won't bother rotating.

bool AutomaticRotation () { if (Time.unscaledTime - lastManualRotationTime < alignDelay) { return false; } Vector2 movement = new Vector2( focusPoint.x - previousFocusPoint.x, focusPoint.z - previousFocusPoint.z ); float movementDeltaSqr = movement.sqrMagnitude; if (movementDeltaSqr < 0.0001f) { return false; } return true; }

Otherwise we have to figure out the horizontal angle matching the current direction. Create a static GetAngle method to convert a 2D direction to an angle for that. The Y component of the direction is the cosine of the angle we need, so put it through Mathf.Acos and then convert from radians to degrees.

static float GetAngle (Vector2 direction) { float angle = Mathf.Acos(direction.y) * Mathf.Rad2Deg; return angle; }

But that angle could represent either a clockwise or a counterclockwise rotation. We can look at the X component of the direction to know which it is. If X is negative then it's counterclockwise and we have to subtract the angle from 360°.

return direction.x < 0f ? 360f - angle : angle;
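The direction-to-angle conversion is easy to check in isolation. Below is a standalone sketch using System.Math rather than Unity's Mathf; the class name Heading is made up, and the clamp on the cosine is an extra safety measure that is not part of the tutorial's method.

```csharp
using System;

public static class Heading
{
    // Converts a normalized 2D direction (x, y) to a heading in degrees,
    // measured clockwise from the +Y axis, in the 0-360 range.
    public static double GetAngle(double x, double y)
    {
        // Added guard: rounding errors could push y slightly outside
        // [-1, 1], which would make Acos return NaN.
        double angle = Math.Acos(Math.Clamp(y, -1.0, 1.0)) * 180.0 / Math.PI;
        return x < 0.0 ? 360.0 - angle : angle;
    }

    public static void Main()
    {
        Console.WriteLine(GetAngle(0, 1));   // forward
        Console.WriteLine(GetAngle(1, 0));   // right
        Console.WriteLine(GetAngle(0, -1));  // backward
        Console.WriteLine(GetAngle(-1, 0));  // left
    }
}
```

The four calls produce approximately 0, 90, 180 and 270 degrees. Keep in mind that the Y component of the 2D direction corresponds to movement along the world Z axis.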

Back in AutomaticRotation we can use GetAngle to get the heading angle, passing it the normalized movement vector. As we already have its squared magnitude it's more efficient to do the normalization ourselves. The result becomes the new horizontal orbit angle.

if (movementDeltaSqr < 0.0001f) { return false; } float headingAngle = GetAngle(movement / Mathf.Sqrt(movementDeltaSqr)); orbitAngles.y = headingAngle; return true;

Smooth Alignment

The automatic alignment works, but immediately snapping to match the heading is too abrupt. Let's slow it down by using the configured rotation speed for automatic rotation as well, so it mimics manual rotation. We can use Mathf.MoveTowardsAngle for this, which works like Mathf.MoveTowards except that it can deal with the 0–360 range of angles.

float headingAngle = GetAngle(movement / Mathf.Sqrt(movementDeltaSqr)); float rotationChange = rotationSpeed * Time.unscaledDeltaTime; orbitAngles.y = Mathf.MoveTowardsAngle(orbitAngles.y, headingAngle, rotationChange);

This is better, but the maximum rotation speed is always used, even for small realignments. A more natural behavior would be to make the rotation speed scale with the difference between current and desired angle. We'll make it scale linearly up to some angle at which we'll rotate at full speed. Make this angle configurable by adding an align smooth range configuration option, with a 0–90 range and a default of 45°.

[SerializeField, Range(0f, 90f)] float alignSmoothRange = 45f;

To make this work we need to know the angle delta in AutomaticRotation, which we can find by passing the current and desired angle to Mathf.DeltaAngle and taking the absolute of that. If this delta falls inside the smooth range, scale the rotation adjustment accordingly.

float deltaAbs = Mathf.Abs(Mathf.DeltaAngle(orbitAngles.y, headingAngle)); float rotationChange = rotationSpeed * Time.unscaledDeltaTime; if (deltaAbs < alignSmoothRange) { rotationChange *= deltaAbs / alignSmoothRange; } orbitAngles.y = Mathf.MoveTowardsAngle(orbitAngles.y, headingAngle, rotationChange);

This covers the case when the focus moves away from the camera, but we can also do it when the focus moves toward the camera. That prevents the camera from rotating away at full speed, changing direction each time the heading crosses the 180° boundary. It works the same except we use 180° minus the absolute delta instead.

if (deltaAbs < alignSmoothRange) { rotationChange *= deltaAbs / alignSmoothRange; } else if (180f - deltaAbs < alignSmoothRange) { rotationChange *= (180f - deltaAbs) / alignSmoothRange; }

Finally, we can dampen rotation of tiny angles a bit more by scaling the rotation speed by the minimum of the time delta and the square movement delta.

float rotationChange = rotationSpeed * Mathf.Min(Time.unscaledDeltaTime, movementDeltaSqr);

Note that with this approach it's possible to move the sphere straight toward the camera without it rotating away. Tiny deviations in direction will be damped as well. Automatic rotation will come into effect smoothly once the heading has been changed significantly.
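The speed scaling used for smooth alignment can be isolated into a small pure function, which makes its behavior at both ends of the angle range easy to inspect. This is only an illustrative sketch; AlignSmoothing and SpeedScale are hypothetical names, and the function returns the factor by which the rotation change would be multiplied.

```csharp
using System;

public static class AlignSmoothing
{
    // Fraction of the full rotation speed to use, given the absolute angle
    // difference (0-180 degrees) and the smooth range, both in degrees.
    public static float SpeedScale(float deltaAbs, float smoothRange)
    {
        if (deltaAbs < smoothRange)
        {
            // Heading is nearly aligned with the camera: slow down.
            return deltaAbs / smoothRange;
        }
        if (180f - deltaAbs < smoothRange)
        {
            // Heading points almost straight at the camera: slow down too.
            return (180f - deltaAbs) / smoothRange;
        }
        return 1f;
    }

    public static void Main()
    {
        foreach (float delta in new[] { 0f, 22.5f, 90f, 170f, 180f })
        {
            Console.WriteLine($"{delta} -> {SpeedScale(delta, 45f)}");
        }
    }
}
```

With a smooth range of 45° the factor is 0.5 at a delta of 22.5°, 1 at 90°, and drops back to zero as the delta approaches 180°. A smooth range of zero never enters either branch, so no division by zero occurs.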

Camera-Relative Movement

At this point we have a decent simple orbit camera. Now we're going to make the player's movement input relative to the camera's point of view.

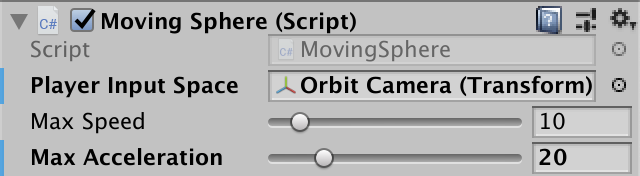

Input Space

The input could be defined in any space, not just world space or the orbit camera's. It can be any space defined by a Transform component. Add a player input space configuration field to MovingSphere for this purpose.

[SerializeField] Transform playerInputSpace = default;

Assign the orbit camera to this field. This is a scene-specific configuration, so not part of the sphere prefab, although it could be set to itself, which would make movement relative to its own orientation.

If the input space is not set then we keep the player input in world space. Otherwise, we have to convert from the provided space to world space. We can do that by invoking Transform.TransformDirection in Update if a player input space is set.

if (playerInputSpace) { desiredVelocity = playerInputSpace.TransformDirection( playerInput.x, 0f, playerInput.y ) * maxSpeed; } else { desiredVelocity = new Vector3(playerInput.x, 0f, playerInput.y) * maxSpeed; }

Normalized Direction

Although converting to world space makes the sphere move in the correct direction, its forward speed is affected by the vertical orbit angle. The further it deviates from horizontal the slower the sphere moves. That happens because we expect the desired velocity to lie in the XZ plane. We can make it so by retrieving the forward and right vectors from the player input space, discarding their Y components and normalizing them. Then the desired velocity becomes the sum of those vectors scaled by the player input.
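The slowdown described above can be reproduced outside Unity with a few lines of vector math. The sketch below assumes a camera pitched 45° down and looking along +Z; InputSpace and Flatten are hypothetical names, and System.Numerics stands in for Unity's Vector3.

```csharp
using System;
using System.Numerics;

public static class InputSpace
{
    // Drops the vertical component and rescales the result to unit length.
    public static Vector3 Flatten(Vector3 v)
    {
        v.Y = 0f;
        return Vector3.Normalize(v);
    }

    public static void Main()
    {
        // Forward vector of a camera pitched 45 degrees down, facing +Z.
        float s = MathF.Sqrt(0.5f);
        Vector3 cameraForward = new Vector3(0f, -s, s);

        // Keeping only X and Z without normalizing loses speed.
        Vector3 raw = new Vector3(cameraForward.X, 0f, cameraForward.Z);
        Console.WriteLine(raw.Length());                     // about 0.71
        Console.WriteLine(Flatten(cameraForward).Length());  // about 1
    }
}
```

A camera looking exactly straight down would leave nothing to normalize, which is one more reason to keep the vertical orbit angle short of 90°.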

if (playerInputSpace) { Vector3 forward = playerInputSpace.forward; forward.y = 0f; forward.Normalize(); Vector3 right = playerInputSpace.right; right.y = 0f; right.Normalize(); desiredVelocity = (forward * playerInput.y + right * playerInput.x) * maxSpeed; }

Camera Collisions

Currently our camera only cares about its position and orientation relative to its focus. It doesn't know anything about the rest of the scene. Thus, it goes straight through other geometry, which causes a few problems. First, it is ugly. Second, it can cause geometry to obstruct our view of the sphere, which makes it hard to navigate. Third, clipping through geometry can reveal areas that shouldn't be visible. We'll begin by only considering the case where the camera's focus radius is set to zero.

Reducing Look Distance

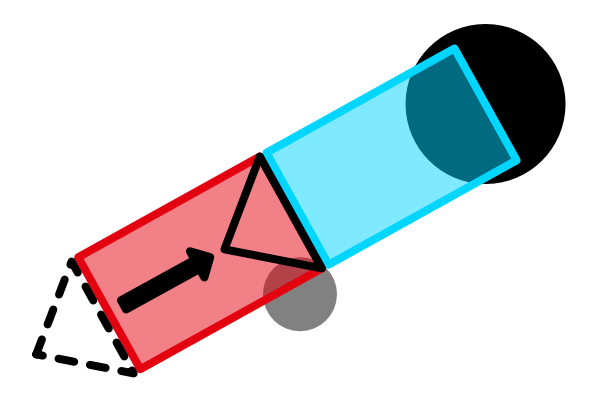

There are various strategies that can be used to keep the camera's view valid. We'll use the simplest, which is to pull the camera forward along its look direction if something ends up in between the camera and its focus point.

The most obvious way to detect a problem is by casting a ray from the focus point toward where we want to place the camera. Do this in OrbitCamera.LateUpdate once we have the look direction. If we hit something then we use the hit distance instead of the configured distance.

Vector3 lookDirection = lookRotation * Vector3.forward; Vector3 lookPosition = focusPoint - lookDirection * distance; if (Physics.Raycast( focusPoint, -lookDirection, out RaycastHit hit, distance )) { lookPosition = focusPoint - lookDirection * hit.distance; } transform.SetPositionAndRotation(lookPosition, lookRotation);

Pulling the camera closer to the focus point can get it so close that it enters the sphere. When the sphere intersects the camera's near plane it can get partially or even totally clipped. You could enforce a minimum distance to avoid this, but that would mean the camera remains inside other geometry. There is no perfect solution to this, but it can be mitigated by restricting vertical orbit angles, not making level geometry too tight, and reducing the camera's near clip plane distance.

Keeping the Near Plane Clear

Casting a single ray isn't enough to solve the problem entirely. That's because the camera's near plane rectangle can still partially cut through geometry even when there is a clear line between the camera's position and the focus point. The solution is to perform a box cast instead, matching the near plane rectangle of the camera in world space, which represents the closest thing that the camera can see. It's analogous to a camera's sensor.

First, OrbitCamera needs a reference to its Camera component.

Camera regularCamera; … void Awake () { regularCamera = GetComponent<Camera>(); focusPoint = focus.position; transform.localRotation = Quaternion.Euler(orbitAngles); }

Second, a box cast requires a 3D vector that contains the half extents of a box, which means half its width, height, and depth.

Half the height can be found by taking the tangent of half the camera's field-of-view angle in radians, scaled by its near clip plane distance. Half the width is that scaled by the camera's aspect ratio. The depth of the box is zero. Let's calculate this in a convenient property.

Vector3 CameraHalfExtends { get { Vector3 halfExtends; halfExtends.y = regularCamera.nearClipPlane * Mathf.Tan(0.5f * Mathf.Deg2Rad * regularCamera.fieldOfView); halfExtends.x = halfExtends.y * regularCamera.aspect; halfExtends.z = 0f; return halfExtends; } }

Now replace Physics.Raycast with Physics.BoxCast in LateUpdate. The half extents have to be added as a second argument, along with the box's rotation as a new fifth argument.

if (Physics.BoxCast( focusPoint, CameraHalfExtends, -lookDirection, out RaycastHit hit, lookRotation, distance )) { lookPosition = focusPoint - lookDirection * hit.distance; }

The near plane sits in front of the camera's position, so we should only cast up to that distance, which is the configured distance minus the camera's near plane distance. If we end up hitting something then the final distance is the hit distance plus the near plane distance.

if (Physics.BoxCast( focusPoint, CameraHalfExtends, -lookDirection, out RaycastHit hit, lookRotation, distance - regularCamera.nearClipPlane )) { lookPosition = focusPoint - lookDirection * (hit.distance + regularCamera.nearClipPlane); }

Note that this means that the camera's position can still end up inside geometry, but its near plane rectangle will always remain outside. Of course this could fail if the box cast already starts inside geometry. If the focus object is already intersecting geometry it's likely the camera will do so as well.
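To get a feel for how small the near plane rectangle used in this section is, the half-extents calculation can be run with concrete numbers. The sketch below is standalone and uses assumed example values (a 60° vertical field of view, a near clip distance of 0.3, and a 16:9 aspect ratio); NearPlane and HalfExtents are hypothetical names.

```csharp
using System;

public static class NearPlane
{
    // Half the width and height of the near plane rectangle, given the
    // vertical field of view in degrees, the near clip distance, and the
    // aspect ratio (width divided by height).
    public static (float halfWidth, float halfHeight) HalfExtents(
        float fieldOfViewDegrees, float nearClipPlane, float aspect)
    {
        float halfHeight = nearClipPlane *
            MathF.Tan(0.5f * fieldOfViewDegrees * MathF.PI / 180f);
        return (halfHeight * aspect, halfHeight);
    }

    public static void Main()
    {
        var (w, h) = HalfExtents(60f, 0.3f, 16f / 9f);
        Console.WriteLine($"{w} x {h}"); // roughly 0.31 x 0.17
    }
}
```

With these example values the box is only about 0.6 units wide, which shows why reducing the near clip distance helps in tight spaces: both extents scale linearly with it.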

Focus Radius

Our current approach works, but only if the focus radius is zero. When the focus is relaxed we can end up with a focus point inside geometry, even though the ideal focus point is valid. Thus we cannot expect that the focus point is a valid start of the box cast, so we'll have to use the ideal focus point instead. We'll cast from there to the near plane box position, which we find by moving from the camera position to the focus position until we reach the near plane.

Vector3 lookDirection = lookRotation * Vector3.forward; Vector3 lookPosition = focusPoint - lookDirection * distance; Vector3 rectOffset = lookDirection * regularCamera.nearClipPlane; Vector3 rectPosition = lookPosition + rectOffset; Vector3 castFrom = focus.position; Vector3 castLine = rectPosition - castFrom; float castDistance = castLine.magnitude; Vector3 castDirection = castLine / castDistance; if (Physics.BoxCast( castFrom, CameraHalfExtends, castDirection, out RaycastHit hit, lookRotation, castDistance )) { … }

If something is hit then we position the box as far away as possible, then we offset to find the corresponding camera position.

if (Physics.BoxCast( castFrom, CameraHalfExtends, castDirection, out RaycastHit hit, lookRotation, castDistance )) { rectPosition = castFrom + castDirection * hit.distance; lookPosition = rectPosition - rectOffset; }

Obstruction Masking

We wrap up by making it possible for the camera to intersect some geometry, by ignoring it when performing the box cast. This makes it possible to ignore small detailed geometry, either for performance reasons or camera stability. Optionally those objects could still be detected but fade out instead of affecting the camera's position, but we won't cover that approach in this tutorial. Transparent geometry could be ignored as well. Most importantly, we should ignore the sphere itself. When casting from inside the sphere it will always be ignored, but a less responsive camera can end up casting from outside the sphere. If it then hits the sphere the camera would jump to the opposite side of the sphere.

We control this behavior via a layer mask configuration field, just like those the sphere uses.

[SerializeField] LayerMask obstructionMask = -1; … void LateUpdate () { … if (Physics.BoxCast( castFrom, CameraHalfExtends, castDirection, out RaycastHit hit, lookRotation, castDistance, obstructionMask )) { rectPosition = castFrom + castDirection * hit.distance; lookPosition = rectPosition - rectOffset; } … }

The next tutorial is Custom Gravity.

Source: https://catlikecoding.com/unity/tutorials/movement/orbit-camera/

Posted by: riddlejoincte.blogspot.com
